Serving gRPC-Web requests on Kubernetes with Istio

Overview

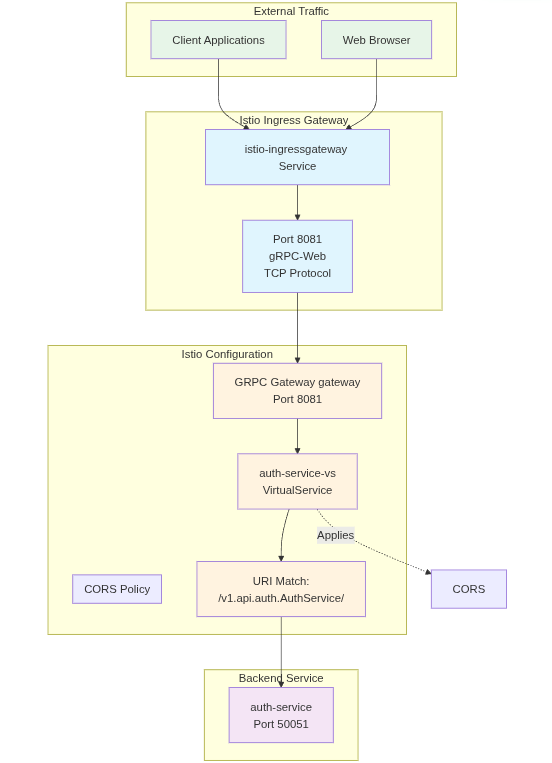

At oof.gg we use gRPC with Protocol Buffers for communication between our services, and we wanted the same strong typing and compact binary encoding in our ReactJS web application. The catch is that browsers can't speak native gRPC: their APIs don't give web applications the low-level HTTP/2 control that gRPC relies on (such as access to trailers). gRPC-Web was created to bridge that gap.

In this post, we'll implement a gRPC-Web gateway on Kubernetes. We experimented with standalone Envoy setups and ultimately settled on a standard Istio configuration, which uses Envoy to translate gRPC-Web requests from the browser into native gRPC requests to the back end. This setup combines ease of implementation with the flexibility to route gRPC-Web requests while still handling standard HTTP/1.1 traffic.

Prerequisites

A Kubernetes cluster, the kubectl, helm, and istioctl CLIs (all used below), and curl for testing.

Set up your Helm Chart

Create a Helm chart with a Chart.yaml at its root and a templates directory containing two files: auth-service.yaml and istio.yaml.
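As a rough sketch, that layout can be created from the shell. This assumes the chart directory is named oof-api (matching the chart name) and also creates a values.yaml, which the templates read from. `helm create oof-api` is an alternative, though it generates sample templates you would likely delete.

```shell
#!/usr/bin/env bash
# Sketch: create the chart layout described above.
# ASSUMPTION: the chart directory is named oof-api; adjust to taste.
set -euo pipefail

chart_dir="oof-api"

# Helm expects Chart.yaml at the chart root and manifests under templates/.
mkdir -p "${chart_dir}/templates"

# Empty placeholders; fill them in with the manifests from this post.
touch "${chart_dir}/Chart.yaml" \
      "${chart_dir}/values.yaml" \
      "${chart_dir}/templates/auth-service.yaml" \
      "${chart_dir}/templates/istio.yaml"

# List what was created.
find "${chart_dir}" -type f | sort
```

Because the install step later runs `helm install oof-api .`, run that command from inside this directory.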

```yaml
apiVersion: v2
name: oof-api
description: Helm chart for oof-api infrastructure
type: application
version: 0.1.0
icon: https://example.com/icon.png
appVersion: "1.0.0"
maintainers:
  - name: oof-api-team
```

Chart.yaml
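The Deployment and Service templates in the next step read `.Values.authService.image` and `.Values.authService.ports.grpc`, but no values.yaml is shown. A minimal one might look like the sketch below, written from the chart root. The image reference is a hypothetical placeholder; the gRPC port is set to 50051 to match the destination port the VirtualService uses later on.

```shell
#!/usr/bin/env bash
# Sketch: write a minimal values.yaml at the chart root.
# ASSUMPTION: the image reference below is a hypothetical placeholder.
set -euo pipefail

cat > values.yaml <<'EOF'
authService:
  # Hypothetical image reference; replace with your registry and tag.
  image: registry.example.com/oof/auth-service:0.1.0
  ports:
    # Must match the VirtualService destination port (50051).
    grpc: 50051
EOF

cat values.yaml
```

The Deployment also mounts a ConfigMap named api-config (key config.yaml), which isn't defined in this chart; it has to exist in the release namespace, or the pod will fail to mount the volume and won't start.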

Example Auth Service Deployment

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: auth-service
  namespace: {{ .Release.Namespace }}
spec:
  selector:
    matchLabels:
      app: auth-service
  template:
    metadata:
      labels:
        app: auth-service
    spec:
      containers:
        - name: auth-service
          image: {{ .Values.authService.image }}
          imagePullPolicy: IfNotPresent # Pull only if the image isn't already on the node
          ports:
            - containerPort: {{ .Values.authService.ports.grpc }}
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
              readOnly: true
      volumes:
        - name: config-volume
          configMap:
            name: api-config
            items:
              - key: config.yaml
                path: config.yaml
---
apiVersion: v1
kind: Service
metadata:
  name: auth-service
  namespace: {{ .Release.Namespace }}
spec:
  selector:
    app: auth-service
  ports:
    - name: grpc # Important: Istio infers the protocol (gRPC) from the port name
      protocol: TCP
      port: {{ .Values.authService.ports.grpc }}
      targetPort: {{ .Values.authService.ports.grpc }}
```

auth-service.yaml
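The port name matters because Istio selects a Service port's protocol from its name when the name follows the `<protocol>[-<suffix>]` convention (the Service's appProtocol field can be used instead). Istio treats grpc like HTTP/2, so naming the port grpc makes Envoy speak HTTP/2 to the auth-service pods; an unrecognized name falls back to automatic protocol detection. The function below is a simplified, hypothetical illustration of that lookup covering only a subset of the prefixes Istio recognizes; it is not Istio's implementation.

```shell
#!/usr/bin/env bash
# Simplified illustration of Istio's port-name protocol selection.
# HYPOTHETICAL helper, not Istio's code; covers only a subset of prefixes.
set -euo pipefail

# Print the protocol Istio would infer from a Service port name.
# Kubernetes port names are lowercase, so no case folding is needed.
istio_protocol_for_port_name() {
  local name=$1
  case "$name" in
    grpc-web|grpc-web-*) echo "grpc-web" ;;   # must be checked before grpc
    grpc|grpc-*)         echo "grpc" ;;
    http2|http2-*)       echo "http2" ;;
    https|https-*)       echo "https" ;;
    http|http-*)         echo "http" ;;
    tls|tls-*)           echo "tls" ;;
    tcp|tcp-*)           echo "tcp" ;;
    *)                   echo "auto-detect" ;; # no match: protocol sniffing
  esac
}

istio_protocol_for_port_name grpc       # the Service port in auth-service.yaml
istio_protocol_for_port_name grpc-web   # the ingress gateway port name
istio_protocol_for_port_name api        # unrecognized name
```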

Istio Setup

For Istio, we'll use the default profile with a basic IstioOperator configuration to keep the example simple. In production, you'll want to serve TLS endpoints for your product.

Operator Configuration

```yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
metadata:
  name: istiocontrolplane
  namespace: istio-system
spec:
  profile: default
  components:
    ingressGateways:
      - name: istio-ingressgateway
        enabled: true
        k8s:
          service:
            ports:
              - port: 8080
                name: http
                targetPort: 8080
              - port: 8081
                name: grpc-web
                protocol: TCP
                targetPort: 8081
```

istio-operator.yaml

Istio Gateway

```yaml
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: {{ .Release.Name }}-gateway
  namespace: {{ .Release.Namespace }}
spec:
  selector:
    istio: ingressgateway
  servers:
    - port:
        number: 8081
        name: grpc-web
        protocol: GRPC-WEB
      hosts:
        - "*"
```

istio.yaml

Auth Service VirtualService

Here's an example VirtualService configuration; your local version may differ. It goes in the same istio.yaml file as the Gateway, separated from it by a `---` line.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: auth-service-vs
  namespace: {{ .Release.Namespace }}
spec:
  hosts:
    - "*"
  gateways:
    - {{ .Release.Name }}-gateway
  http:
    - match:
        - port: 8081
          uri:
            prefix: /v1.api.auth.AuthService/
      corsPolicy:
        allowOrigins:
          - exact: "*"
        allowMethods:
          - POST
          - OPTIONS
        allowHeaders:
          - content-type
          - x-grpc-web
          - x-user-agent
          - authorization
        maxAge: "24h"
      route:
        - destination:
            host: auth-service
            port:
              number: 50051
```

istio.yaml
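The URI prefix match works because gRPC and gRPC-Web requests are sent as a POST to `/<package>.<Service>/<Method>`, where the first segment is the fully qualified protobuf service name. The helpers below are a hypothetical sketch that builds such a path and checks it against the VirtualService prefix, assuming `v1.api.auth` is the proto package, as the prefix implies.

```shell
#!/usr/bin/env bash
# Sketch: build a gRPC request path and test it against a route prefix.
# ASSUMPTION: the proto package is v1.api.auth, as the VirtualService prefix implies.
set -euo pipefail

# grpc_path PACKAGE SERVICE METHOD -> /PACKAGE.SERVICE/METHOD
grpc_path() {
  printf '/%s.%s/%s\n' "$1" "$2" "$3"
}

# Succeeds if PATH ($1) starts with PREFIX ($2), like a `uri.prefix` match.
matches_prefix() {
  case "$1" in
    "$2"*) return 0 ;;
    *)     return 1 ;;
  esac
}

route_prefix="/v1.api.auth.AuthService/"
login_path=$(grpc_path v1.api.auth AuthService Login)

if matches_prefix "$login_path" "$route_prefix"; then
  echo "$login_path is routed to auth-service:50051"
else
  echo "$login_path does not match $route_prefix"
fi
```

Every method on AuthService shares the same prefix, so one route covers the whole service; another service would need its own `match` entry.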

Configuring the Cluster

With all the configuration in place, let's deploy everything to the cluster.

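Install Istio

```shell
# -y is short for --skip-confirmation
istioctl install --set profile=default -f istio-operator.yaml -y
```

Load your Helm Chart

```shell
# Run from the chart's root directory
helm install oof-api . --namespace oof-api --create-namespace --wait --timeout 300s
```

Install the Kubernetes Gateway API CRDs (optional)

The Gateway and VirtualService in this post use Istio's own networking.istio.io APIs, so this step isn't strictly required here. The command only applies the Kubernetes Gateway API CRDs if they aren't already installed.

```shell
# Apply the Gateway API CRDs (v1.3.0) only if they are missing
kubectl get crd gateways.gateway.networking.k8s.io &> /dev/null || \
  { kubectl kustomize "github.com/kubernetes-sigs/gateway-api/config/crd?ref=v1.3.0" | kubectl apply -f -; }
```

Bind your namespace to Istio

```shell
kubectl label namespace oof-api istio-injection=enabled --overwrite
# Sidecars are injected at pod creation, so restart pods created before the label
kubectl rollout restart deployment/auth-service -n oof-api
```

Test Your Setup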

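Now, if I've written this walkthrough correctly, your services will respond to gRPC-Web requests on port 8081. This assumes the ingress gateway is reachable on localhost, for example through a local cluster's load balancer or `kubectl -n istio-system port-forward svc/istio-ingressgateway 8081:8081`. A request like the one below should receive a response from your gRPC server; a malformed request gets back only headers from Istio, with no payload.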
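The request below leaves its body as the placeholder PAYLOAD. For application/grpc-web-text, the body is the base64 encoding of gRPC frames: a 1-byte flag (0x00 for an uncompressed data frame), a 4-byte big-endian message length, then the serialized protobuf message. The sketch below assembles such a body by hand for a hypothetical LoginRequest whose only field is a string in field 1; real messages should be serialized with your generated code (or `protoc --encode`) rather than hand-encoded.

```shell
#!/usr/bin/env bash
# Sketch: build a grpc-web-text request body by hand.
# ASSUMPTION: hypothetical message LoginRequest { string username = 1; }.
set -euo pipefail

# Protobuf encoding of field 1 as a string (wire type 2).
# Only handles ASCII values shorter than 128 bytes (one-byte length varint).
encode_string_field1() {
  local value=$1
  printf '\x0a'                               # tag byte: (1 << 3) | 2
  printf "$(printf '\\x%02x' "${#value}")"    # length byte
  printf '%s' "$value"                        # raw ASCII bytes
}

# Wrap stdin in one gRPC data frame: flag 0x00, 4-byte big-endian length, message.
grpc_web_frame() {
  local tmp n
  tmp=$(mktemp)
  cat > "$tmp"                                # binary-safe: keep bytes in a file
  n=$(wc -c < "$tmp" | tr -d ' ')
  printf "$(printf '\\x%02x\\x%02x\\x%02x\\x%02x\\x%02x' \
    0 $(( (n >> 24) & 255 )) $(( (n >> 16) & 255 )) $(( (n >> 8) & 255 )) $(( n & 255 )))"
  cat "$tmp"
  rm -f "$tmp"
}

# grpc-web-text bodies are base64; drop any line wrapping base64 adds.
build_payload() {
  encode_string_field1 "$1" | grpc_web_frame | base64 | tr -d '\n'
}

build_payload alice   # prints: AAAAAAcKBWFsaWNl
echo
```

The resulting string can be passed to curl's `--data-raw` in place of PAYLOAD; whether the server accepts it depends on your actual LoginRequest schema.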

Request

```shell
# -v prints the connection details and headers shown in the response below
curl -v 'http://localhost:8081/v1.api.auth.AuthService/Login' \
  -H 'X-User-Agent: grpc-web-javascript/0.1' \
  -H 'Accept: application/grpc-web-text' \
  -H 'Content-Type: application/grpc-web-text' \
  -H 'X-Grpc-Web: 1' \
  --data-raw 'PAYLOAD'
```

Response

```
* Host localhost:8081 was resolved.
* IPv6: ::1
* IPv4: 127.0.0.1
*   Trying [::1]:8081...
* Connected to localhost (::1) port 8081
> POST /v1.api.auth.AuthService/Login HTTP/1.1
> Host: localhost:8081
> User-Agent: curl/8.5.0
> X-User-Agent: grpc-web-javascript/0.1
> Accept: application/grpc-web-text
> Content-Type: application/grpc-web-text
> X-Grpc-Web: 1
> Content-Length: 28
>
< HTTP/1.1 200 OK
< content-type: application/grpc-web-text+proto
< x-envoy-upstream-service-time: 108
< date: Wed, 11 Jun 2025 21:26:13 GMT
< server: istio-envoy
< transfer-encoding: chunked
<
* Connection #0 to host localhost left intact
AAAAAiEK/wFleUpoYkdjaU9pSklVekkxT...
```
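The response body is grpc-web-text as well, so its leading characters can be decoded to inspect the first frame header. In the sample above, the first eight characters (AAAAAiEK) decode to flag 0x00, a data frame, and a message length of 545 bytes. A flag with the high bit set (0x80) instead marks the trailers frame that carries grpc-status. The sketch below reads only the first 5-byte header and assumes its input is valid base64 covering at least those five bytes.

```shell
#!/usr/bin/env bash
# Sketch: inspect the first gRPC-Web frame header in a grpc-web-text body.
# ASSUMPTION: input is valid base64 that decodes to at least 5 bytes.
set -euo pipefail

# Print "flag=0xNN length=N type=data|trailers" for the first frame.
grpc_web_frame_header() {
  local flag b1 b2 b3 b4 len kind
  # Decode, then read the first 5 bytes as unsigned decimal values.
  read -r flag b1 b2 b3 b4 < <(printf '%s' "$1" | base64 --decode | od -An -tu1 -N5)
  len=$(( (b1 << 24) | (b2 << 16) | (b3 << 8) | b4 ))
  if (( flag & 0x80 )); then kind=trailers; else kind=data; fi
  printf 'flag=0x%02x length=%d type=%s\n' "$flag" "$len" "$kind"
}

# First eight characters of the sample response above.
grpc_web_frame_header 'AAAAAiEK'   # prints: flag=0x00 length=545 type=data
```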