Building a basic question-answer agent with LangGraph

When you think of agents, you may picture highly flexible, dynamic systems that respond to what you say and reason over your answers. People today use Large Language Models (LLMs) and agents to solve a variety of complex reasoning problems. But what if you need an agent to stick to a fixed sequence of questions that the user must answer? For this, we develop a simplified question-answer agent architecture using LangGraph that follows a set sequence of questions while keeping the flexibility that comes with human interaction.

| Benefits | Drawbacks |
|---|---|
| | |

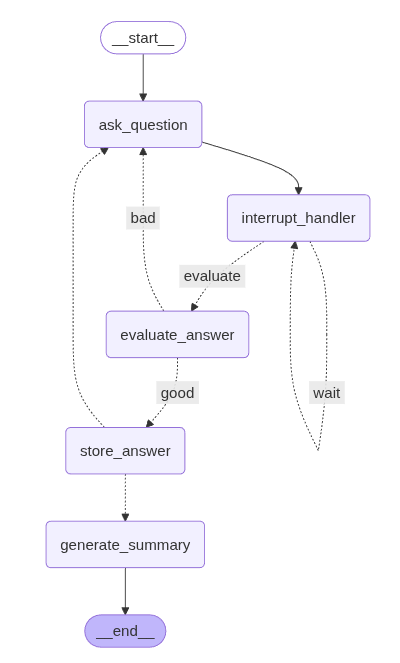

High-Level Agent Architecture

We'll start by selecting an LLM that can run locally on an NVIDIA RTX 3090 (24 GB), supports tool calling, and holds up well in a continuous, multi-turn conversation. In Ollama communities on Reddit, QwQ-32B is generally recommended as a model that fits in 24 GB of VRAM, is supported by LangChain/LangGraph, and supports tool calling, with DeepSeek-R1 as the usual runner-up.

The framework used to implement this agent architecture is LangGraph: its graph-based design lends itself well to workflows, and it has solid debugging support, including Mermaid diagram generation and native LangSmith integration.

Basic LangGraph Architecture

The architecture centers on three steps: asking a question, interrupting execution so the user can answer, and validating the answer to decide whether it is good enough to write to memory. After the last question, the system generates a summary of the questions and answers.

Memory

For this example, we're using LangGraph's native checkpoint memory, which saves the chat history and associates it with a thread ID. It's simply implemented as:

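```python
from langgraph.checkpoint.memory import MemorySaver

# Inside the agent wrapper's __init__: use an injected checkpointer if one
# was passed in, otherwise fall back to an in-process MemorySaver
# (its state is lost when the process exits).
self.memory = memory or MemorySaver()
```

Main Nodes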

Ask Question Node

The ask_question node is straightforward. It reads the list of questions to ask from my agent wrapper class, and each successful pass through the LangGraph loop increments step to point at the next question.

```python
from langchain_core.messages import AIMessage, HumanMessage  # used by the nodes below


def ask_question(self, state: CustomAgentState) -> CustomAgentState:
    """Ask the next question and wait for user input."""
    # Initialize state keys on the first pass
    if "step" not in state:
        state["step"] = 0
    if "answers" not in state:
        state["answers"] = {}
    if "messages" not in state:
        state["messages"] = []

    q_index = state["step"]
    # Every index from 0 to len(questions) - 1 is a valid question
    if q_index < len(self.questions):
        question = self.questions[q_index]
        print(f"Asking question {q_index + 1}: {question[:50]}...")
        # Post the question to the conversation as an AI message
        state["messages"].append(AIMessage(content=question))
    else:
        print("❌ NO MORE QUESTIONS TO ASK")

    return state
```

From here, once the user's input has been collected, the evaluate_answer_check edge decides whether to route on to store_answer or back to ask_question.

Store Answer

Next, store_answer saves the answer, keyed by its question, in the conversation state so the summarization node can retrieve them all later.

```python
def store_answer(self, state: CustomAgentState) -> CustomAgentState:
    """Store the current answer and move to the next step."""
    step = state["step"]
    # Check the bounds before indexing, so an out-of-range step can't raise IndexError
    if step < len(self.questions):
        question = self.questions[step]
        human_messages = [m for m in state["messages"] if isinstance(m, HumanMessage)]
        if human_messages:
            # The most recent human message is the answer to the current question
            last = human_messages[-1]
            state["answers"][question] = last.content
            print(f"✅ Stored answer for step {step}")

    state["step"] += 1
    print(f"✅ Incremented step to: {state['step']}")
    return state
```

Generate Summary

Once all questions have been answered, our flow summarizes the answers and outputs a final response to the user, using the LLM for inference.

```python
def generate_summary(self, state: CustomAgentState) -> CustomAgentState:
    """Generate the final summary."""
    qa_summary = "\n".join(f"{k}: {v}" for k, v in state["answers"].items())
    template = "Some formatting for the output"
    # f-string, so the collected answers and the template are interpolated
    prompt = (
        "Generate a summary based on the following questions and answers -- "
        f"follow the template format:\n{qa_summary}\n\n{template}"
    )
    summary = self.llm.invoke([HumanMessage(content=prompt)])
    state["messages"].append(AIMessage(content=summary.content))
    return state
```

Conditional Edges

In LangGraph, conditional edges handle routing logic, with or without LLM inference. In an interviewer agent, you can run inference inside a conditional edge to make the routing decision.

Evaluate Answer Check

My evaluate_answer_check edge always returns "good" unless a few basic conditions fail, but you can modify this conditional edge to evaluate answers however you like.

```python
def evaluate_answer_check(self, state: CustomAgentState) -> str:
    """Router that evaluates answer quality and returns a routing decision."""
    human_messages = [m for m in state["messages"] if isinstance(m, HumanMessage)]
    if not human_messages:
        print("No human messages found to evaluate.")
        return "bad"

    last = human_messages[-1]
    answer = last.content.strip()

    # Lenient check: reject only empty or one-word answers
    if len(answer.split()) < 2:
        print("❌ Answer too short - returning BAD")
        return "bad"

    # Insert an LLM evaluation call here (e.g. ask a model to judge whether
    # the answer fits the question and return "good" or "bad")
    print("✅ Answer acceptable - returning GOOD")
    return "good"
```

As an example, you could run reasoning logic within the edge to infer whether the answer fits the question, and from there decide whether to store the answer or rephrase the question.

Human Input Check

The human_input_check edge expects the user to provide an answer; once the user has written one, the graph moves on to the evaluation logic. The interrupt is the key point here: without it, the graph would run from start to end with no pause for user interaction.

You can build a LangGraph workflow that interacts with a user without interrupts, but the most reliable approach I've found with less capable models is to control the state explicitly with interrupts. With stronger LLMs (e.g. Claude 3.7 Sonnet, GPT-4 and later), you can instead let a ReAct agent decide, via tool calling, when to ask a question, store an answer, and so forth.

```python
from langgraph.types import interrupt


def human_input_check(self, state: CustomAgentState) -> str:
    """Check whether the user answered the latest question; if not, 'interrupt'."""
    messages = state["messages"]
    # The user has replied only if the newest message is a human message.
    # (Counting human messages against step breaks after a "bad" answer:
    # the rejected reply still counts, so it would be re-evaluated forever.)
    if not messages or not isinstance(messages[-1], HumanMessage):
        # No new human input: pause the graph here
        print("❌ TRIGGERING WAIT - No new input")
        interrupt("Waiting for user input")
        return "wait"  # Not reached on the first call: interrupt() raises to pause the run

    # We have input, proceed to evaluation
    return "evaluate"
```

Decide Next After Store

The conditional edge decide_next_after_store determines whether to route back to ask_question or on to generate_summary, depending on how many questions remain.
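
The step bookkeeping is easy to get off by one, and it can be checked without LangGraph or an LLM. A plain-Python sketch, assuming every answer is accepted on the first try (`replay` is a hypothetical helper). With a bound of `step < len(questions) - 1`, the third question below would never be asked.

```python
def decide_next_after_store(step: int, num_questions: int) -> str:
    # store_answer has already incremented step, so step equals the number
    # of answered questions; summarize once every question is answered.
    return "ask_question" if step < num_questions else "generate_summary"


def replay(questions: list[str]) -> list[str]:
    """Walk the ask -> store -> decide cycle and return the questions asked."""
    if not questions:
        return []
    step, asked = 0, []
    while True:
        asked.append(questions[step])  # ask_question
        step += 1  # store_answer
        if decide_next_after_store(step, len(questions)) == "generate_summary":
            return asked


questions = ["Name?", "Project?", "Blockers?"]
print(replay(questions))  # ['Name?', 'Project?', 'Blockers?']
```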

```python
def decide_next_after_store(self, state: CustomAgentState) -> str:
    """Decide what to do after storing an answer."""
    # store_answer has already incremented step, so step now equals the
    # number of answered questions
    if state["step"] < len(self.questions):
        print("❌ Still more questions")
        return "ask_question"
    else:
        print("✅ All questions answered - generating summary")
        return "generate_summary"
```

Putting it all together

The code below is the Python implementation of the Mermaid diagram above. The interrupt_handler and evaluate_answer nodes it registers are not shown in this post.

```python
from langgraph.graph import END, START, StateGraph

builder = StateGraph(CustomAgentState)

builder.add_node("ask_question", self.ask_question)
builder.add_node("interrupt_handler", self.interrupt_handler)
builder.add_node("evaluate_answer", self.evaluate_answer)
builder.add_node("store_answer", self.store_answer)
builder.add_node("generate_summary", self.generate_summary)

# Set entry point
builder.add_edge(START, "ask_question")
builder.add_edge("ask_question", "interrupt_handler")

# After the interrupt handler, check for human input
builder.add_conditional_edges(
    "interrupt_handler",
    self.human_input_check,
    {
        "wait": "interrupt_handler",  # Loop back if no input
        "evaluate": "evaluate_answer",  # Proceed if we have input
    },
)

# After the evaluation node, use the router to decide the path
builder.add_conditional_edges(
    "evaluate_answer",
    self.evaluate_answer_check,  # Router function
    {
        "good": "store_answer",
        "bad": "ask_question",
    },
)

# After storing an answer, decide the next step
builder.add_conditional_edges(
    "store_answer",
    self.decide_next_after_store,
    {
        "ask_question": "ask_question",
        "generate_summary": "generate_summary",
    },
)

# End after the summary
builder.add_edge("generate_summary", END)

graph = builder.compile(checkpointer=self.memory)
```

Calling the Graph Workflow

Calling the graph after compiling it is straightforward. Depending on your setup, you may want graph.stream or graph.invoke; the choice is yours. Note that graph.stream returns a lazy iterator, so the graph only runs as you iterate over it.

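```python
from langchain_core.messages import HumanMessage

# The thread_id ties this run to its checkpointed history in MemorySaver
config = {"configurable": {"thread_id": "some_thread_id"}}

# Fire off the initial state (e.g. ask the first question).
# stream() is lazy, so iterate it; the run pauses at the interrupt.
initial_state = {"step": 0, "answers": {}, "messages": []}
for event in graph.stream(initial_state, config=config):
    print(event)

# When the user responds, add their reply to the thread's state...
user_input = "Hello world"
graph.update_state(config, {"messages": [HumanMessage(content=user_input)]})

# ...then continue the paused run from its checkpoint (None = no new input)
for event in graph.stream(None, config=config):
    print(event)
```

To learn more about LangGraph, see the official LangGraph documentation.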